As a regular practice, I collect links and share them across Elder Research’s Slack during the week. Here are a few worth highlighting this week.

AI ambivalence

This is how I feel using gen-AI: like a babysitter. It spits out reams of code, I read through it and try to spot the bugs, and then we repeat. Although of course, as Cory Doctorow points out, the temptation is to not even try to spot the bugs, and instead just let your eyes glaze over and let the machine do the thinking for you – the full dream of vibe coding.

…

Really, that’s my current state: ambivalence. I acknowledge that these tools are incredibly powerful, I’ve even started incorporating them into my work in certain limited ways (low-stakes code like POCs and unit tests seem like an ideal use case), but I absolutely hate them.

…

[M]aybe my best bet is to continue to zig while others are zagging, and to try to keep my coding skills sharp while everyone else is “vibe coding” a monstrosity that I will have to debug when it crashes in production someday.

I’m glad articulate people exist in the world. Noah comes awfully close to capturing how I feel as well; I’ve been using the word “ambivalent” for a bit, too. Of course, lots of people don’t feel this way at all, but it’s worth processing all the same.

All models are wrong

We often hear the George Box quote that “all models are wrong.” I hadn’t heard this one, though, quoted by Danielle Navarro:

Since all models are wrong the scientist must be alert to what is importantly wrong. It is inappropriate to be concerned about mice when there are tigers abroad.

I guess it’s from the same paper, too!

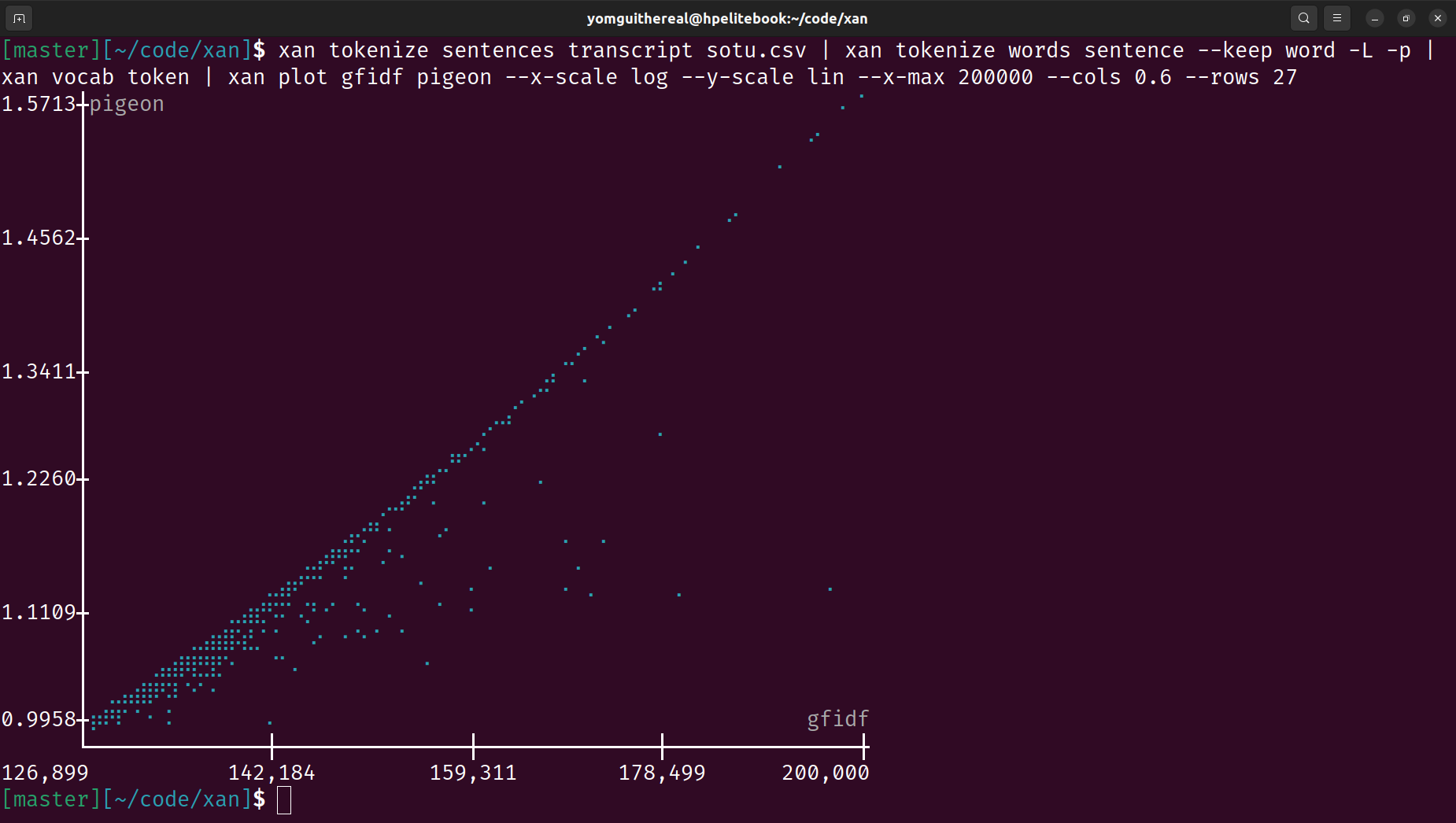

xan, the CSV magician

A fun, and odd, new tool from SciencesPo’s médialab that makes it easy(ish) to work with CSVs from the command line. It also includes a bunch of ASCII charting capabilities right in the Terminal. Reminds me of tv, in a good way.

AI in the enterprise isn’t doing so hot

David Gerard writes, summarizing an S&P Global Market Intelligence survey:

AI in the enterprise is failing faster than last year — even as more companies try it out. 60% of companies that S&P spoke to say they “invest” in AI by getting into generative AI. Which usually means subscriptions to LLMs.

But so far in 2025, 46% of the surveyed companies have thrown out their AI proofs-of-concept and 42% have abandoned most of their AI initiatives — complete failure. The abandonment rate in 2024 was 17%.

Something I’m keeping an eye on in my own work.

April Fools check-in

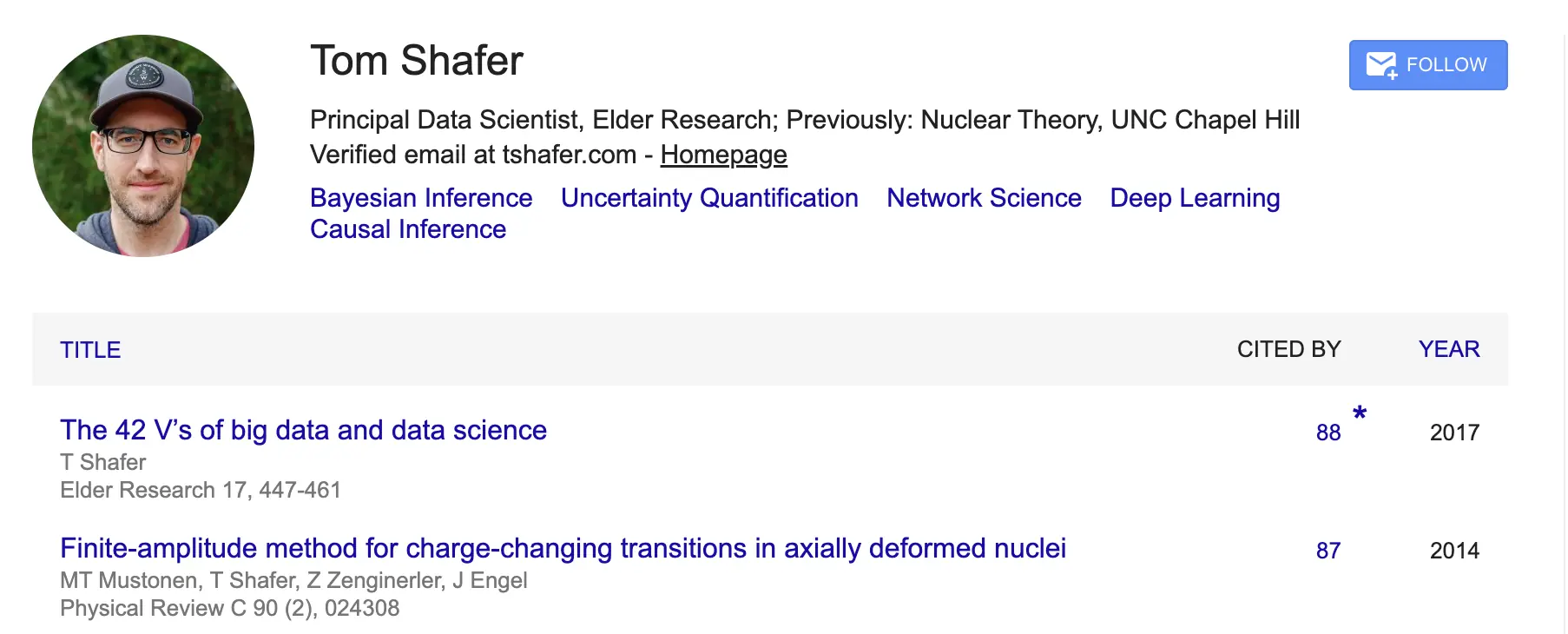

April 1st happened this week, so let’s check in on that random jokey blog post my boss suggested we write eight years ago:

As of now, it’s the most cited thing I’ve ever written. And it was a joke. Take that, Evan.